Cloud services help to automate simple and complex processes. For analyzing pictures, Microsoft Cognitive Services offer a bunch of services like the Vision API, (Custom) Computer Vision, Face API, Video Indexer and more. Follow this first sample with Postman to get started with the Vision API.

Azure Cognitive Services can easily be integrated into custom processes and apps. A good starting point is the Cognitive Services APIs web page on Azure.

The goal here is to analyze the content of a picture; in this sample, a photo of a shiny motorcycle.

So, all we need is a Cognitive Services resource in Azure and a tool to send requests and receive the data. Let's start.

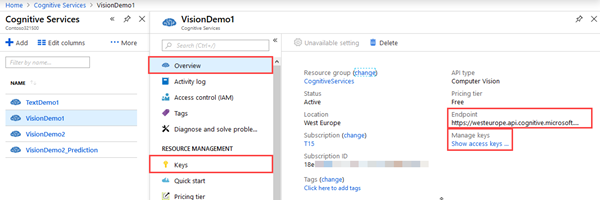

The "Computer Vision API - v1.0" page describes the service we want to consume (even though we are using v2.0). First, we need to create a new Cognitive Services resource in the Azure Portal to get our own access data. Once it is created, we find that data in the service overview and in the Keys menu.

Then, calling the Computer Vision API is easy: we need an HTTP POST request against the API, and we only have to replace the region and the subscription key in the URL:

`https://<region>.api.cognitive.microsoft.com/vision/v2.0/analyze?visualFeatures=Description,Tags&subscription-key=<subscription-key>`

So, my sample starts with `https://westeurope.api.cognitive.microsoft.com/vision/v2.0/analyze...`
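To script the same call outside Postman, the URL can be assembled in code. This is a minimal sketch using only the Python standard library. The helper `build_analyze_url` is hypothetical, and putting the key in the query string simply mirrors the URL template above. Nothing is sent over the network here.

```python
from typing import Sequence
from urllib.parse import urlencode


def build_analyze_url(region: str, subscription_key: str,
                      features: Sequence[str]) -> str:
    """Fill in the v2.0 analyze URL template (hypothetical helper)."""
    base = f"https://{region}.api.cognitive.microsoft.com/vision/v2.0/analyze"
    # safe="," keeps the commas literal, so the result matches the template.
    query = urlencode(
        {"visualFeatures": ",".join(features),
         "subscription-key": subscription_key},
        safe=",",
    )
    return f"{base}?{query}"


# "YOUR-KEY" is a placeholder for the key from the Azure Portal.
print(build_analyze_url("westeurope", "YOUR-KEY", ["Description", "Tags"]))
# prints https://westeurope.api.cognitive.microsoft.com/vision/v2.0/analyze?visualFeatures=Description,Tags&subscription-key=YOUR-KEY
```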

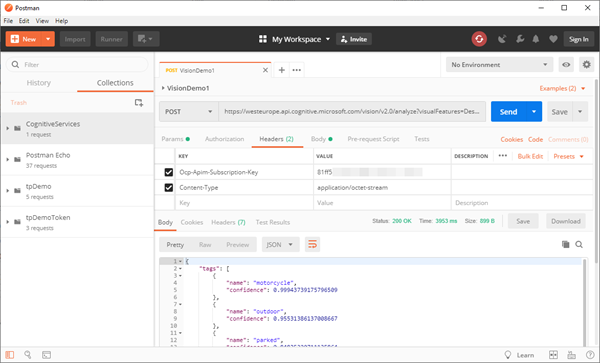

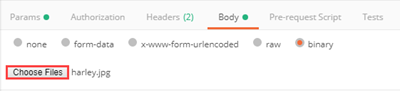

Postman is an excellent free tool for testing. Here, we just create a new POST request with that URL and its parameters, as shown here:

In the Headers, we add the key "Content-Type" with the value "application/octet-stream", and as the Body we add the image that shall be uploaded with the POST request (Postman's "binary" body option).
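The same request can be sketched in Python with `urllib.request`: the raw image bytes become the POST body, and the header is the one set in Postman. The helper names `build_image_request` and `send_request` are hypothetical, and `send_request` only succeeds with a valid region and subscription key.

```python
import json
import urllib.request


def build_image_request(url: str, image_bytes: bytes) -> urllib.request.Request:
    """Prepare (but do not send) a POST request that uploads raw image bytes."""
    return urllib.request.Request(
        url,
        data=image_bytes,  # the binary image is the request body
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def send_request(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body into a dict."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (needs network access and a real key; the file name is hypothetical):
#   with open("motorcycle.jpg", "rb") as f:
#       request = build_image_request(analyze_url, f.read())
#   result = send_request(request)
```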

When we click the "Send" button, the request is sent to the API. After a few seconds of analysis, the result is shown in the response body, similar to this. The returned data says there is a 99% chance that the photo shows a motorcycle, it's 95% outdoor and 84% parked, with the description "a motorcycle parked on the side" and more details. Here's the full output in JSON format.

```json
{
  "tags": [
    {
      "name": "motorcycle",
      "confidence": 0.99943739175796509
    },
    {
      "name": "outdoor",
      "confidence": 0.95531386137008667
    },
    {
      "name": "parked",
      "confidence": 0.84835320711135864
    },
    {
      "name": "motorbike",
      "confidence": 0.84835320711135864
    }
  ],
  "description": {
    "tags": [
      "motorcycle",
      "outdoor",
      "parked",
      "sitting",
      "standing",
      "field",
      "black",
      "side",
      "man",
      "people",
      "woman",
      "old",
      "group",
      "table",
      "red",
      "plate",
      "display",
      "blue"
    ],
    "captions": [
      {
        "text": "a motorcycle parked on the side",
        "confidence": 0.86568715712148681
      }
    ]
  },
  "requestId": "4870258f-f470-4520-8806-9ec23a30bb35",
  "metadata": {
    "width": 3072,
    "height": 1728,
    "format": "Jpeg"
  }
}
```
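Turning a response like this into readable percentages takes only a few lines. This sketch assumes the response has already been decoded into a Python dict (for example with `json.loads`); `summarize` and its `min_confidence` threshold are hypothetical, and the sample dict is an excerpt of the output above.

```python
def summarize(result: dict, min_confidence: float = 0.0) -> list[str]:
    """Format tags (filtered by min_confidence) and captions as percentages."""
    lines = []
    tags = sorted(result.get("tags", []),
                  key=lambda tag: tag["confidence"], reverse=True)
    for tag in tags:
        if tag["confidence"] >= min_confidence:
            lines.append(f"{tag['name']}: {tag['confidence']:.1%}")
    # Captions are always listed; the threshold only applies to tags.
    for caption in result.get("description", {}).get("captions", []):
        lines.append(f"caption '{caption['text']}': {caption['confidence']:.1%}")
    return lines


sample = {
    "tags": [
        {"name": "motorcycle", "confidence": 0.99943739175796509},
        {"name": "outdoor", "confidence": 0.95531386137008667},
        {"name": "parked", "confidence": 0.84835320711135864},
    ],
    "description": {"captions": [
        {"text": "a motorcycle parked on the side",
         "confidence": 0.86568715712148681},
    ]},
}

for line in summarize(sample, min_confidence=0.9):
    print(line)
# motorcycle: 99.9%
# outdoor: 95.5%
# caption 'a motorcycle parked on the side': 86.6%
```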

The Vision API supports more visualFeatures, so we can extend that parameter with any of these values. What are you interested in?

- Categories - categorizes image content according to a taxonomy defined in the documentation.

- Tags - tags the image with a detailed list of words related to the image content.

- Description - describes the image content with a complete English sentence.

- Faces - detects whether faces are present. If so, it returns their coordinates, gender and age.

- ImageType - detects whether the image is clip art or a line drawing.

- Color - determines the accent color, the dominant color, and whether the image is black and white.

- Adult - detects whether the image is pornographic in nature (depicts nudity or a sex act). Sexually suggestive content is also detected.

In addition, the details parameter can deliver more domain-specific information:

- Celebrities - identifies celebrities if detected in the image.

- Landmarks - identifies landmarks if detected in the image.

To get all this information, use parameters like these:

`?visualFeatures=Categories,Tags,Description,Faces,ImageType,Color,Adult&details=Celebrities`
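When the feature list is built in code, a quick check against the names listed above catches typos before a request is sent. This is a minimal sketch: the two sets simply copy the values from this post (the API documentation remains the authoritative list), and `build_query` is a hypothetical helper.

```python
from typing import Sequence

# Values copied from the lists above; not an exhaustive API reference.
VISUAL_FEATURES = {"Categories", "Tags", "Description", "Faces",
                   "ImageType", "Color", "Adult"}
DETAILS = {"Celebrities", "Landmarks"}


def build_query(features: Sequence[str], details: Sequence[str] = ()) -> str:
    """Build the query string, rejecting names not listed in this post."""
    unknown = (set(features) - VISUAL_FEATURES) | (set(details) - DETAILS)
    if unknown:
        raise ValueError(f"unsupported values: {sorted(unknown)}")
    query = "?visualFeatures=" + ",".join(features)
    if details:
        query += "&details=" + ",".join(details)
    return query


print(build_query(["Categories", "Tags", "Description", "Faces",
                   "ImageType", "Color", "Adult"], ["Celebrities"]))
# prints ?visualFeatures=Categories,Tags,Description,Faces,ImageType,Color,Adult&details=Celebrities
```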

Then, we get back information such as "dominantColorForeground": "Black", "isRacyContent": false and "adultScore": 0.01571008563041687. The adult score ranges from 0 to 1, so a value of about 0.016 (roughly 1.6 percent) means the service considers this picture very unlikely to be adult content.
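These values can be read from the decoded response as well. This sketch assumes the v2.0 response nests them in `color` and `adult` objects; the dict below holds only the values quoted above, and `describe_color_and_adult` is a hypothetical helper.

```python
def describe_color_and_adult(result: dict) -> str:
    """Summarize the foreground color and adult flags (assumed nesting)."""
    color = result.get("color", {})  # assumed key for Color results
    adult = result.get("adult", {})  # assumed key for Adult results
    score = adult.get("adultScore", 0.0)
    return (f"foreground: {color.get('dominantColorForeground')}, "
            f"racy: {adult.get('isRacyContent')}, "
            f"adult score: {score:.3f} (~{score:.1%})")


example = {
    "color": {"dominantColorForeground": "Black"},
    "adult": {"isRacyContent": False, "adultScore": 0.01571008563041687},
}
print(describe_color_and_adult(example))
# prints foreground: Black, racy: False, adult score: 0.016 (~1.6%)
```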

So, the service delivers a lot of useful information with a single API call. The next step is to integrate that service into custom processes or apps. The playground is open!